As a fan of the scientific method and a champion of honesty in public life, I look back over the past few weeks in British politics and want to weep.

Can I leave aside for a second the naked ambition and frankly sociopathic behaviour of a few power-hungry Etonians? I guess we should expect that. (And anyway, they still make me too angry to think straight.)

Instead, let’s just look at how, as a population, we have behaved when it comes to intelligently sharing and filtering facts and information. As so many people have pointed out, in the run-up to the referendum, whether a claim was founded on empirical truth or on lies didn’t seem to figure at all when people were making their decision. The fact that some claims had been shown to be false by nationally recognised people and organisations made no difference at all.

We seem to have participated in a mass frontal lobotomy — an astounding sudden forgetting of how to think properly. (And there has been no shortage of terrified well-educated people pointing out that the last time there was a collective abandoning of intellectual principles of this magnitude was in the 1930s…)

But look at the irony. Here we are, armed with a global communications network that even our most prescient ancestors could hardly have dreamed of, and yet we have managed to increase our ignorance, and allow obvious lies and rumours to rule unchecked.

The tragedy is that, although we have this almost magical asset, the internet (which lets us instantly and effortlessly pass data, insight and ideas to each other, flowing from person to person without government or corporate control), we have let it become a high-speed rumour mill. Maybe that shouldn’t come as a surprise, but I can’t help thinking it’s the most amazing own goal.

I’m not sure what the best way to address this is, and it’s certainly going to take a long time for us as a population to collectively wake up, gen up and relearn the good old lessons of empirical free-thinking from our Enlightenment predecessors.

But one thing occurred to me that might just help: “trust networks”. I’m not an expert on them, and there are many geniuses out there working on this right now who know much more than I do. But it strikes me that if we had had a strong internet-based trust network in place (and a federated one, i.e. not run by a single company), we might not have circulated quite as many shoddy claims as we did.

While I was based at the Hub, Islington (a fascinating crucible of all sorts of people creating projects and startups), I had some memorable conversations about how such networks might work. (FYI: I was running Dynamic Demand at the time, and joining in conversations that would soon become Demand Logic.)

As far as I understand it, the principle is a little like Google’s PageRank: the “importance score” that Google’s algorithm lets flow from page to page through links, in order to estimate which pages searchers are likely to find most relevant.

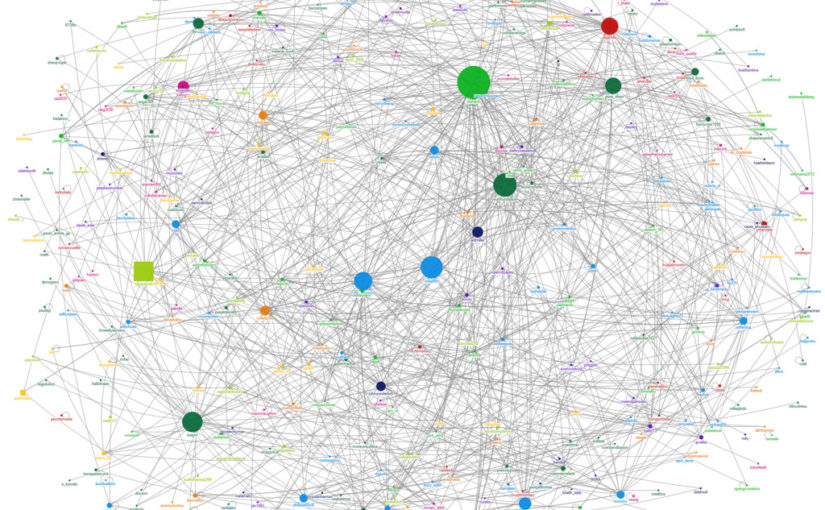

We all have people we trust to speak on certain topics, and they have people they trust, and so on. Trust networks are ways to score information and ideas on their credibility, based on their long journey from originator to you through a complex ‘web of trust’. If a post, idea or claim comes to your attention, it should be tagged with a trust score based on who it came from and who circulated it.
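To make the PageRank comparison a bit more concrete, here is a minimal Python sketch. It rests on some big assumptions of my own: trust is modelled as a weighted, directed graph; trust flows outward from *you* in the style of “personalised” PageRank (every step, a share of trust returns to your own starting point); and a claim’s credibility is simply the total trust you place in everyone who originated or passed it on. The names `personal_trust`, `claim_credibility` and the example people are all hypothetical, and this is not how any real trust network necessarily works.

```python
from typing import Dict, List

# Hypothetical model: who trusts whom, with non-negative weights.
# truster -> {trusted person: weight}
TrustGraph = Dict[str, Dict[str, float]]


def personal_trust(graph: TrustGraph, me: str,
                   damping: float = 0.85, iterations: int = 50) -> Dict[str, float]:
    """Personalised-PageRank-style trust: scores flow out from `me` along
    trust links and always restart at `me`, so they reflect MY web of trust."""
    nodes = set(graph) | {p for edges in graph.values() for p in edges} | {me}
    score = {n: 0.0 for n in nodes}
    score[me] = 1.0
    for _ in range(iterations):
        new = {n: 0.0 for n in nodes}
        new[me] = 1.0 - damping  # restart: a share of trust always returns to me
        for truster, s in score.items():
            edges = graph.get(truster, {})
            total = sum(edges.values())
            if total == 0:
                # Someone who trusts nobody: hand their share back to me
                # so the scores keep summing to 1.
                new[me] += damping * s
                continue
            for trusted, weight in edges.items():
                # Split this person's trust in proportion to the edge weights.
                new[trusted] += damping * s * weight / total
        score = new
    return score


def claim_credibility(trust: Dict[str, float], vouchers: List[str]) -> float:
    """Assumed scoring rule: total trust in everyone who originated or
    passed on the claim, counting each person once."""
    return sum(trust.get(p, 0.0) for p in set(vouchers))


# Hypothetical example: nobody in my web of trust trusts mallory.
example_graph: TrustGraph = {
    "me": {"alice": 1.0, "bob": 1.0},
    "alice": {"carol": 1.0},
    "bob": {"carol": 0.5, "dave": 0.5},
    "mallory": {"carol": 1.0},
}

if __name__ == "__main__":
    trust = personal_trust(example_graph, "me")
    for person, s in sorted(trust.items(), key=lambda kv: -kv[1]):
        print(f"{person:8s} {s:.3f}")
    print("claim via mallory only:", claim_credibility(trust, ["mallory"]))
    print("claim via carol and dave:",
          round(claim_credibility(trust, ["carol", "dave"]), 3))
```

In this toy example carol ends up more trusted than dave, because both alice and bob vouch for her, while mallory scores zero no matter whom mallory trusts, so a claim circulated only by mallory carries no credibility at all. Restarting at `me`, rather than computing one global ranking, is what makes the scores personal to each reader.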

In some implementations, you would not only see how likely something was to be factually correct (because credibility had been added to it by the people you trust to assess this kind of thing, and by the people they trust…), but you would also get an instant picture of the ethical ramifications of, say, a new policy idea, because it would also carry scores accumulated from people with whom you share fundamental ethical principles.

There are some obvious dangers. For example, golden nuggets (especially novel, disruptive ideas) could get crushed too early if the network reacts wrongly. Such a system, if not cleverly designed, might also strengthen ‘silos of thought’ and ethical divisions. And of course such networks need to be run in a way that is open, honest and free from vested interests, which is no small ask. But these issues can’t be beyond our wits to sort out.

I think it’s time to think seriously about open trust networks. Surely any tool that might help us out of the vicious rumour mill is worth a try?

(Written a little too rapidly in one of Stoke Newington’s many wonderful cafes while recovering from a rather horrid dental appointment…)